As in many scientific disciplines, transparency and openness are essential to the credibility of economics research. This is especially true because economics research informs economic policy decisions. Indeed, in recent years, governments and policy institutions have pushed for “evidence-based” policy-making and strive to ground their policies in academic research. For these policies to be credible, the underlying research must be trustworthy, and to be trustworthy, research needs to be reproducible.

The peer review process in economics journals ensures that original research is published and provides a stamp of “high quality”. However, editors and referees are not obliged to check that “same data and same code give the same results”. Hence, the referee process provides no feedback on reproducibility. To foster reproducibility, journals have put in place data availability policies (DAP henceforth), as early as 1933 in the case of Econometrica. Other journals followed fairly early as well, such as the Journal of Money, Credit and Banking in the late 1990s. Among the “top 5” journals, the American Economic Review introduced a DAP in 2005, followed by the Quarterly Journal of Economics in 2016. As of 2017, only 54% of the 343 economics journals in the Thomson Reuters Social Science Citation Index had a DAP (Höffler 2017).

In this study, we test whether data availability policies with light enforcement, such as the one introduced by the AEA in 2005, yield reproducible results. Although such policies involve no systematic check of the code, making code and data publicly available should in theory enhance transparency. We give undergraduate students only the code, data and information supplied by the authors, and we check whether they can successfully replicate the results in the paper.

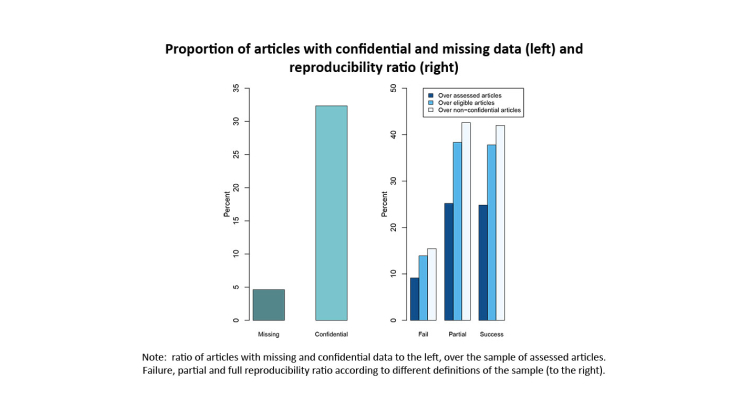

First, we find only moderate replication success despite the data availability policy. Across the full sample of assessed articles, only 25% were successfully replicated. This share rises to 38% among eligible papers only, that is, those for which the authors did not report using confidential data. Conditional on non-confidential data, the reproducibility rate is higher, at 43%. This low reproducibility is driven by confidential or missing data. Even when restricting to non-confidential data, our analysis shows that a substantial number of articles report results that differ from those obtained with their replication packages. Second, we show that even reproducible articles required complex code changes to obtain results similar to those in the paper. Our analysis suggests that data availability policies are necessary but not sufficient to ensure full replicability.

Most importantly, we show that replicated papers do not enjoy a citation bonus. Higher citation counts may thus not be a sufficient incentive to adopt transparent documentation and coding practices. Our analysis therefore calls for a systematic check of articles during the referee process as a way to reach a “good reproducibility” equilibrium.